about me

Hi! I'm an MEng at MIT CSAIL advised by Pulkit Agrawal.

I'm interested in building useful, dexterous robots. To navigate our world's complexity and unpredictability, we need AI systems that continuously adapt and improve beyond their training. Therefore, I'm excited about harmonizing learning at scale with reasoning, search, and planning.

I'm really proud of EvoGym, a tool for soft robot design and control co-optimization which was featured in Scientific American, Wired, MIT News, and IEEE Spectrum!

Outside of research, I co-founded Momentum AI, an education nonprofit dedicated to teaching AI to underserved high-schoolers. I also enjoy making, running, playing Avalon, and inventing bad puns.

news

DexHub, a platform for internet-scale robot data collection, accepted to two CoRL workshops.

Co-founded Ember ML.

Accepted position as Machine Learning Researcher @ Scale AI for Summer ’22.

Won $175K and 2nd Place in the Regeneron Science Talent Search, a research and science competition for high school seniors.

projects

Scaling Robot Data Collection with Augmented Reality

How do we collect an internet-scale robotics dataset? The logistics of real-world data collection make scaling difficult, requiring expensive robot hardware, physical environments, and time-consuming scene resets. DexHub is a simulation-based robotics crowdsourcing platform that only requires a VR headset. Compared to alternatives, non-experts can collect data 2x faster with DexHub. Leveraging the flexibility of simulation, policies trained with DexHub are more robust than their real-world counterparts. Try it with Apple Vision Pro!

Building A Tool for Soft Robot Design and Control Co-optimization

Evolution Gym is the first benchmark tool for studying soft robot co-optimization: jointly optimizing a robot’s body and brain. We built EvoGym to have 1) a fast soft-body simulator, 2) an OpenAI Gym-inspired Python interface, and 3) over 30 environments, in order to overcome the prior intractability and irreproducibility of soft robot co-design experiments. In addition, we proposed 3 SOTA co-design algorithms and benchmarked them extensively on all EvoGym environments to establish baseline performance in this field. We hope EvoGym will lead to the development of increasingly intelligent robots! Code and extensive docs are available!

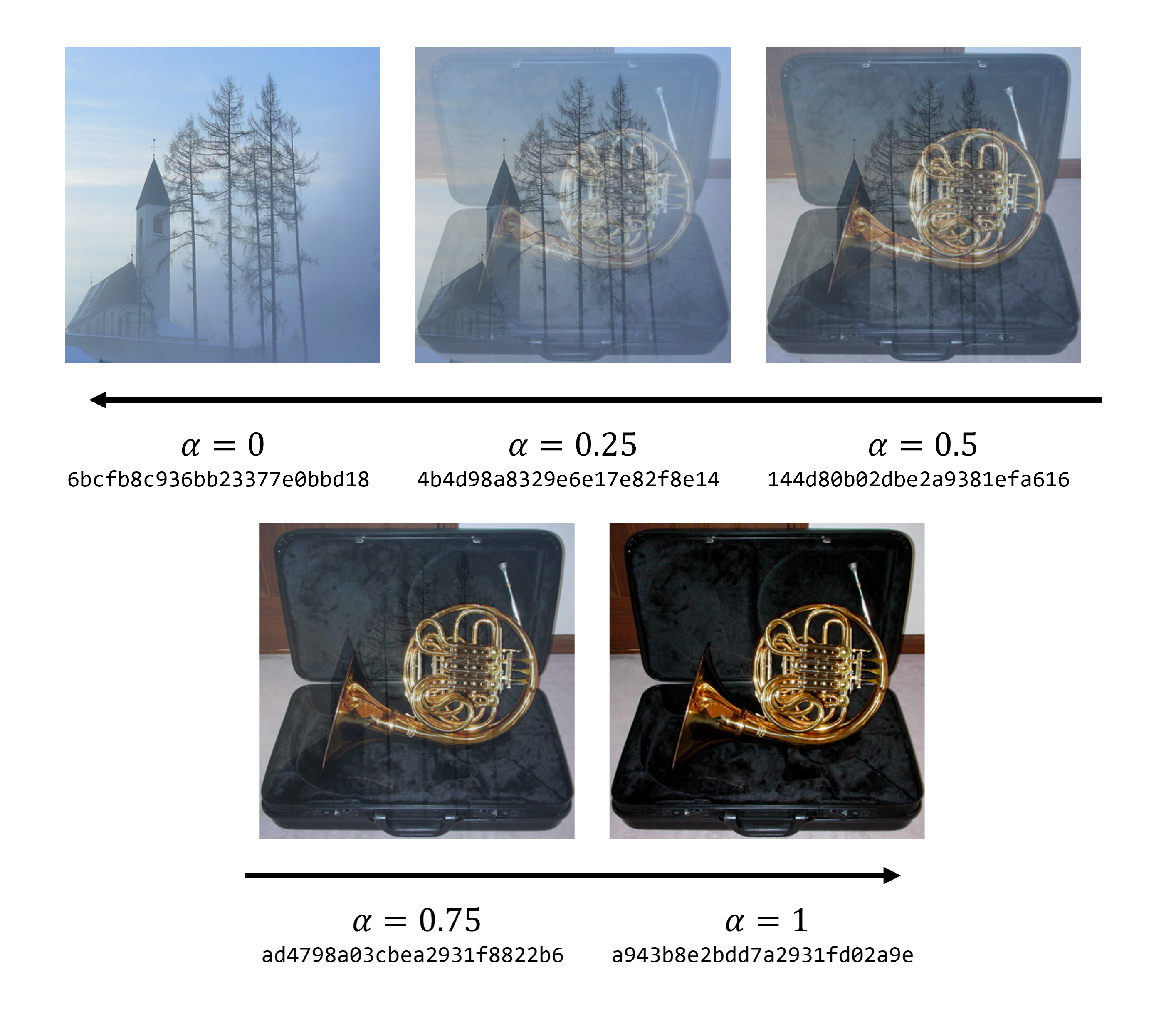

Breaking Apple's NeuralHash through Approximate Linearity

Presented at the 2022 ML4Cyber workshop at ICML.

Perceptual hashes map images with identical semantic content to the same n-bit hash value, and otherwise assign images to different hashes. Apple’s NeuralHash is one such system aiming to detect illegal content on users’ devices without compromising consumer privacy. We make the surprising discovery that NeuralHash is approximately linear, which inspired the development of novel black-box attacks that can (i) evade detection of ‘illegal’ images, (ii) generate near-collisions, and (iii) leak information about hashed images, all without access to model parameters. These vulnerabilities pose serious threats to NeuralHash’s security goals; to address them, we propose a simple fix using classical cryptographic standards.
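To give a feel for why linearity matters, here is a toy sketch. It is not NeuralHash and not the attacks from the project: it assumes a hypothetical hash that is exactly linear before thresholding (random weights, 16-value "images"), and all function names are made up for illustration. For such a hash, blending one image toward another moves every pre-threshold value along a straight line, so each bit flips at most once and the Hamming distance to the target hash never increases along the path. Note that the blend itself needs no access to the weights.

```python
import random


def linear_hash(weights, image):
    """Toy perceptual hash: one bit per weight row, the sign of a dot product."""
    return tuple(
        1 if sum(w * p for w, p in zip(row, image)) >= 0 else 0
        for row in weights
    )


def hamming(a, b):
    """Number of positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def interpolate(source, target, t):
    """Pixel-wise blend: t=0 gives source, t=1 gives target."""
    return [(1 - t) * s + t * g for s, g in zip(source, target)]


def distances_along_path(weights, source, target, steps):
    """Hamming distance to the target's hash at evenly spaced blend levels."""
    target_hash = linear_hash(weights, target)
    return [
        hamming(linear_hash(weights, interpolate(source, target, k / steps)), target_hash)
        for k in range(steps + 1)
    ]


# Hypothetical setup: 32-bit hash over 16-value images, random weights.
rng = random.Random(0)
weights = [[rng.gauss(0, 1) for _ in range(16)] for _ in range(32)]
source = [rng.random() for _ in range(16)]
target = [rng.random() for _ in range(16)]

print(distances_along_path(weights, source, target, steps=20))
```

A real neural hash is only approximately linear, so this monotone behavior would hold only roughly there; the toy just shows the idealized case.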

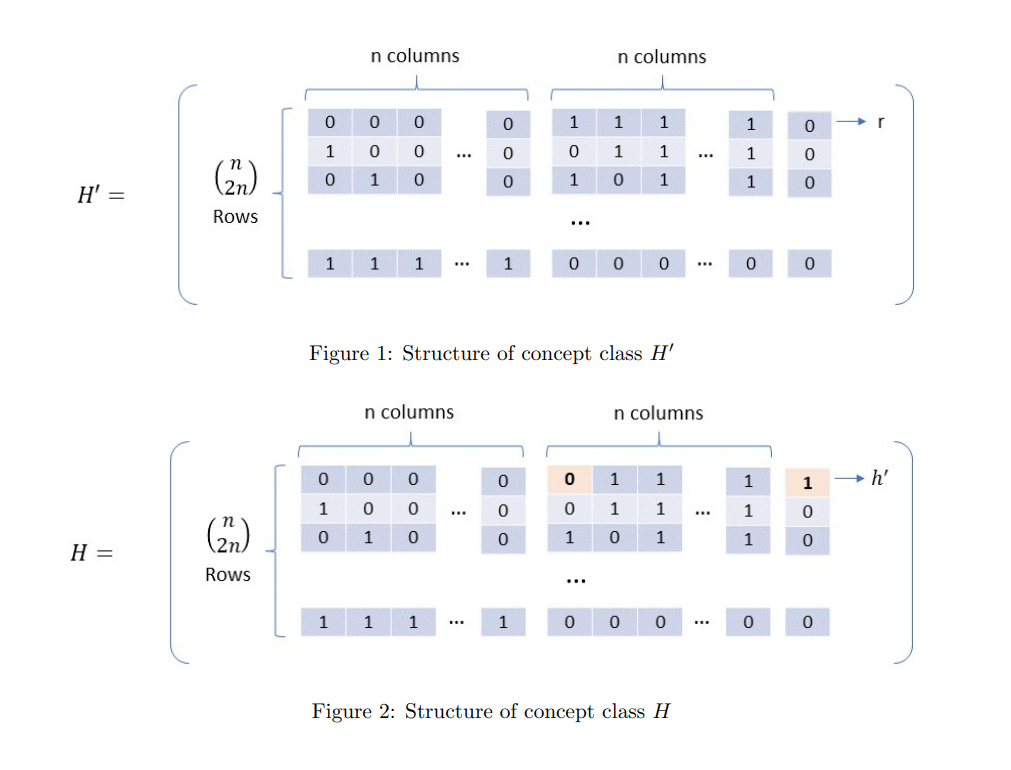

Improving Machine Learning Algorithms for PAC Learning

We designed and analyzed novel, simple, and efficient algorithms for interactively learning non-binary concepts in the learning from random counter-examples (LRC) model. Here, learning takes place from random counter-examples that the learner receives in response to their proper equivalence queries, and the learning time is the number of counter-examples needed by the learner to identify the target concept. Such learning is particularly suited for online ranking, classification, clustering, etc., where machine learning models must be used before they are fully trained.
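The protocol described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the algorithms from the project: concepts are tuples of labels over a small finite domain, the teacher picks a counter-example uniformly at random among the points where the hypothesis is wrong, and the learner uses a deliberately naive strategy (query the first concept still consistent with everything seen). The function name and the toy concept class are hypothetical.

```python
import itertools
import random


def learn_from_random_counterexamples(concepts, target, rng):
    """Simulate the LRC loop; return (learned concept, counter-examples used).

    concepts: list of tuples, each mapping domain point i to a label.
    target:   the hidden concept; assumed to be a member of `concepts`.
    """
    candidates = list(concepts)  # concepts consistent with all feedback so far
    num_counterexamples = 0
    while True:
        # Proper equivalence query: the hypothesis comes from the concept class.
        hypothesis = candidates[0]
        wrong_points = [
            x for x in range(len(target)) if hypothesis[x] != target[x]
        ]
        if not wrong_points:
            return hypothesis, num_counterexamples
        # Teacher answers with a random counter-example and its true label.
        x = rng.choice(wrong_points)
        num_counterexamples += 1
        # Discard every concept that disagrees with the revealed label.
        candidates = [c for c in candidates if c[x] == target[x]]


# Toy non-binary class: every labeling of 4 points with labels {0, 1, 2}.
concepts = list(itertools.product(range(3), repeat=4))
target = (2, 0, 1, 1)
learned, cost = learn_from_random_counterexamples(concepts, target, random.Random(0))
print(learned, cost)
```

The learning time in this model is the returned count of counter-examples; smarter choices of which hypothesis to query are what reduce it.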

making

3D Modular Origami

Fold. Crease. Fold. Shape. Repeat. Each model is made with anywhere from 100 to 1,000 individually crafted pieces. It’s time-consuming, but also quite relaxing!

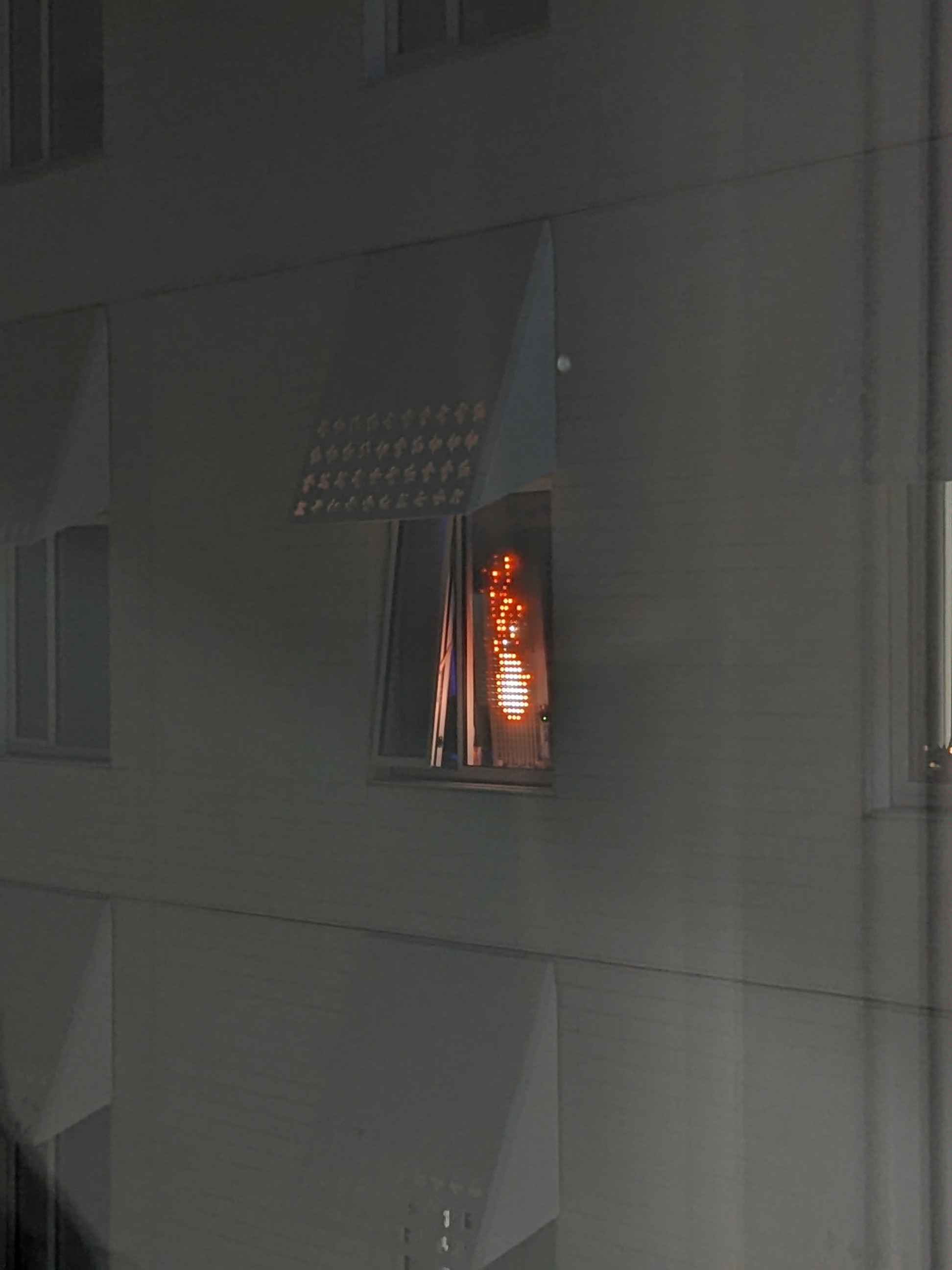

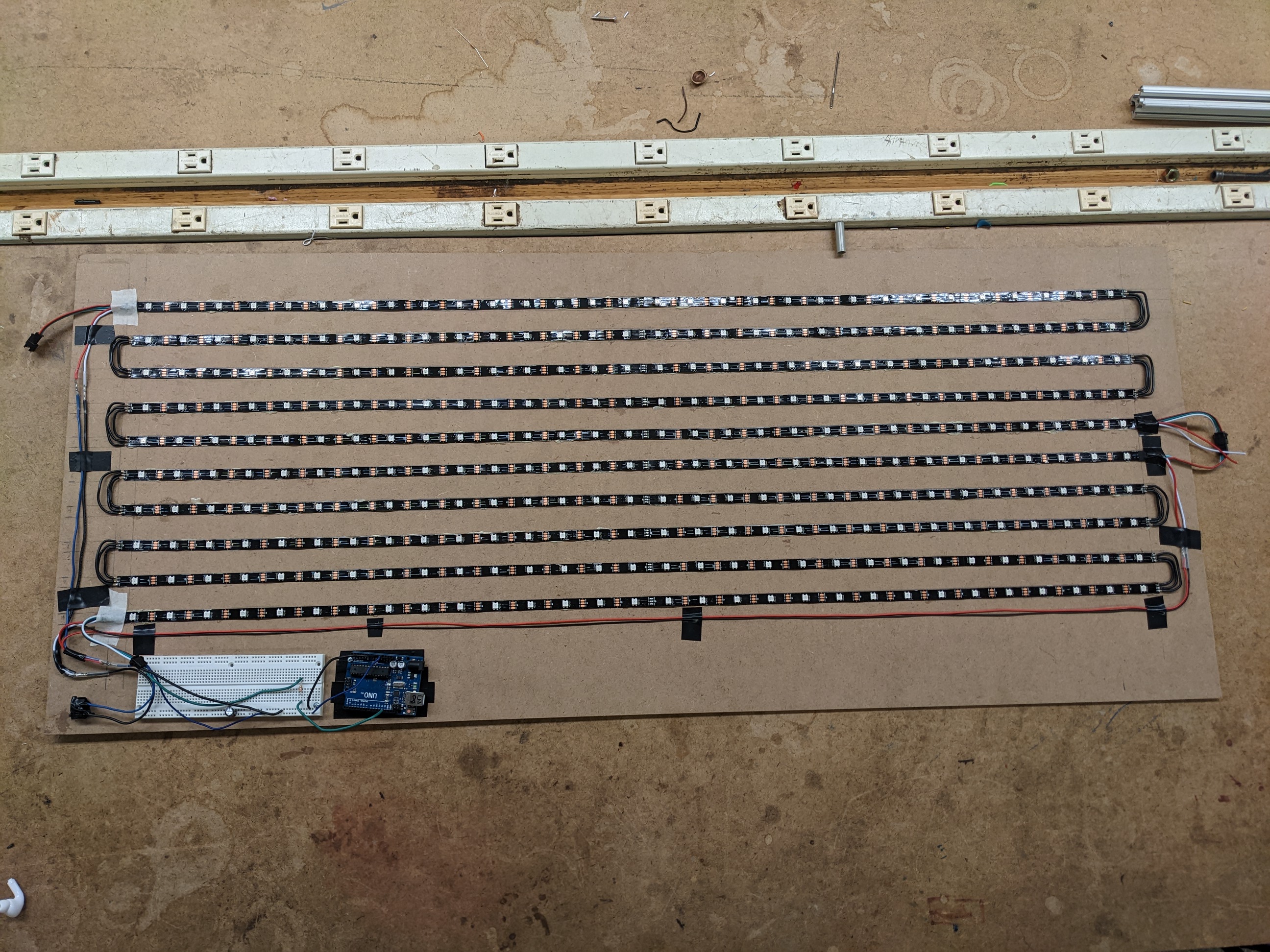

LED Display Board

My first-ever project in an MIT makerspace! The display is a 30x10 grid of individually addressable WS2812B LEDs controlled by an Arduino UNO – meaning I can display nearly any low-res image or gif. It’s facing the street from my New Vassar window for all of MIT to see :D

Raised Garden Beds

Built some raised garden beds for my backyard. This was during COVID so I had a lot of time on my hands… We’ve since grown lots of veggies, but the tomatoes always turn out best!